Donsker’s Theorem, a cornerstone in the field of probability theory, shows that suitably rescaled random walks converge in distribution to Brownian motion, revealing powerful insights into weak convergence and the role of subsequences in proving it. Understanding this theorem is crucial for researchers and practitioners in statistics, finance, and machine learning, as it bridges theoretical concepts with practical applications. Have you ever wondered how seemingly random phenomena can exhibit predictable patterns? Donsker’s Theorem not only assists in deciphering these complexities but also lays the groundwork for advanced statistical methods. By exploring the theorem, you’ll uncover its implications for distributional limits and how subsequential limits are used to establish convergence. Join us in diving deeper into this fascinating subject to enhance your understanding and application of probability in real-world contexts.

Understanding Donsker’s Theorem in Probability Theory

Understanding Donsker’s theorem offers a fascinating glimpse into the mechanics of probability theory, particularly how random processes converge. Imagine you’re exploring a series of random walks. As you observe the paths of these walks at scale, you realize that, under certain conditions, they start to resemble a familiar and elegant structure: Brownian motion. This is precisely where Donsker’s theorem comes into play, bridging the connection between discrete stochastic processes and their continuous counterparts.

At its core, Donsker’s theorem provides a formal framework describing the convergence in distribution of a normalized random walk to a Brownian motion. This mode of convergence is known as weak convergence: a sequence of probability measures converges weakly to a limiting probability measure if the expectations of every bounded continuous function converge. In practical terms, if you take the partial sums of independent, identically distributed (i.i.d.) random variables with mean zero and finite variance, and rescale time by the number of steps and space by the square root of the number of steps, the theorem assures you that the law of the entire rescaled path converges to that of a Brownian motion as the number of steps increases. This is stronger than convergence at any single time point, and it enables statisticians and researchers to model complex systems more effectively and understand the asymptotic behavior of different stochastic processes.
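Stated a little more formally, in one standard formulation (the notation \(S_k\), \(W_n\), and \(W\) is introduced here for illustration): let \(X_1, X_2, \ldots\) be i.i.d. with mean zero and variance \(\sigma^2 \in (0, \infty)\), let \(S_k = X_1 + \cdots + X_k\) with \(S_0 = 0\), and define the linearly interpolated, rescaled path

\[
W_n(t) = \frac{1}{\sigma \sqrt{n}} \Big( S_{\lfloor nt \rfloor} + (nt - \lfloor nt \rfloor)\, X_{\lfloor nt \rfloor + 1} \Big), \qquad 0 \le t \le 1 .
\]

The theorem then says that

\[
W_n \Rightarrow W \quad \text{in } C[0,1],
\]

where \(W\) is a standard Brownian motion and \(\Rightarrow\) denotes weak convergence of the laws on the space of continuous functions. Setting \(t = 1\) recovers the classical Central Limit Theorem, \(S_n / (\sigma \sqrt{n}) \Rightarrow \mathcal{N}(0, 1)\).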

Theoretical Underpinnings

Understanding the conditions under which Donsker’s theorem holds is vital for its application. The standard proof has two ingredients: convergence of the finite-dimensional distributions, which follows from the multivariate Central Limit Theorem, and tightness of the laws of the rescaled paths. Subsequences enter through tightness: a tight sequence has weakly convergent subsequences, and if every such subsequential limit is the same Brownian motion, the whole sequence converges. This attention to subsequences reflects a broader theme in probability theory, where convergence in distribution is often established by first extracting convergent subsequences and then identifying their common limit.

The implications of Donsker’s theorem are profound. It elegantly links the seemingly chaotic nature of random walks to the smooth, continuous behavior of Brownian motion, providing a powerful tool for analysis in various fields, including finance, physics, and biology. Any scenario involving large numbers of independent random variables might leverage this theorem, guiding the prediction of overall outcomes based on individual behaviors. In short, Donsker’s theorem not only enriches the field of probability but serves as a bridge connecting discrete events to continuous phenomena, revolutionizing how statisticians and mathematicians understand randomness.

The Concept of Weak Convergence Explained

The beauty of weak convergence lies in its ability to capture the essence of randomness without requiring the random variables themselves to converge. Think of throwing a die repeatedly. Each roll represents a random variable, and while the outcomes can seem chaotic, weak convergence allows us to look at the overall distribution of an aggregate as we consider more and more rolls. As the number of rolls grows, the individual outcomes fade into a coherent picture: the distribution of the centered and scaled sum of the rolls resembles a smooth function, one that can be described by a well-known form, the normal distribution.

Weak convergence, formally speaking, refers to the convergence of a sequence of probability measures to a limiting probability measure. More explicitly, if we have a sequence of random variables \(X_n\) that converges in distribution to a random variable \(X\), we denote this as \(X_n \xrightarrow{d} X\). This means that the cumulative distribution functions (CDFs) of \(X_n\) converge to the CDF of \(X\) at all continuity points of the CDF of \(X\). This convergence is not as stringent as convergence in probability; rather, it recognizes that while particular realizations of random variables may fluctuate wildly, the distributions they generate can stabilize in a predictable way.
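As a rough numerical illustration of convergence in distribution, the sketch below compares the simulated CDF of a standardized sum of fair die rolls with the standard normal CDF at a few points. The function names, sample sizes, test points, and seed are illustrative choices rather than anything prescribed by the theory.

```python
import math
import random


def normal_cdf(x):
    """Standard normal CDF, written with the error function."""
    return 0.5 * (1.0 + math.erf(x / math.sqrt(2.0)))


def standardized_die_sum(n_rolls, rng):
    """Sum of n_rolls fair die rolls, centered and scaled to unit variance."""
    mean, var = 3.5, 35.0 / 12.0  # mean and variance of a single fair die roll
    total = sum(rng.randint(1, 6) for _ in range(n_rolls))
    return (total - n_rolls * mean) / math.sqrt(n_rolls * var)


def max_cdf_gap(n_rolls, n_samples, points, rng):
    """Largest gap between the simulated CDF and the normal CDF at `points`."""
    draws = [standardized_die_sum(n_rolls, rng) for _ in range(n_samples)]
    gaps = []
    for x in points:
        simulated = sum(d <= x for d in draws) / n_samples
        gaps.append(abs(simulated - normal_cdf(x)))
    return max(gaps)


rng = random.Random(0)  # fixed seed so the run is reproducible
gap = max_cdf_gap(
    n_rolls=100, n_samples=4000, points=[-1.5, -0.5, 0.0, 0.5, 1.5], rng=rng
)
print(f"largest CDF gap at the test points: {gap:.3f}")
```

The gap combines Monte Carlo noise with the discreteness of the die sum, so it should be small but not zero; increasing both `n_rolls` and `n_samples` should shrink it further.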

Why It Matters

This concept is crucial in many areas of statistics, particularly in cases where sums of random variables are involved. For example, the Central Limit Theorem (CLT) is a cornerstone of probability theory showing that, under certain conditions, the normalized sum of a large number of i.i.d. random variables will converge in distribution to a normal distribution. Donsker’s theorem generalizes this idea from a single sum to the whole path, showing that random walks, when appropriately rescaled in time and space, converge to Brownian motion. This transition from discrete steps to a continuous model provides a powerful framework for understanding complex systems modeled by stochastic processes.
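One way to see the path-level statement numerically is to check a signature property of Brownian motion: the covariance between its values at times \(s\) and \(t\) is \(\min(s, t)\). The sketch below simulates rescaled random walks with fair ±1 steps (an illustrative choice with mean zero and unit variance) and estimates that covariance. All names and parameters are hypothetical.

```python
import math
import random


def rescaled_walk(n_steps, rng):
    """One path of S_k / sqrt(n) for k = 0..n, using fair +/-1 steps."""
    path, position = [0.0], 0
    scale = math.sqrt(n_steps)
    for _ in range(n_steps):
        position += 1 if rng.random() < 0.5 else -1
        path.append(position / scale)
    return path


def sample_covariance(xs, ys):
    """Unbiased sample covariance of two equally long lists."""
    mx, my = sum(xs) / len(xs), sum(ys) / len(ys)
    return sum((x - mx) * (y - my) for x, y in zip(xs, ys)) / (len(xs) - 1)


rng = random.Random(1)  # fixed seed for reproducibility
n_steps, n_paths = 400, 3000
s, t = 0.25, 0.75

at_s, at_t = [], []
for _ in range(n_paths):
    path = rescaled_walk(n_steps, rng)
    at_s.append(path[int(s * n_steps)])  # value of the rescaled walk at time s
    at_t.append(path[int(t * n_steps)])  # value of the rescaled walk at time t

cov = sample_covariance(at_s, at_t)
print(f"estimated covariance: {cov:.3f}, Brownian value min(s, t): {min(s, t):.3f}")
```

Matching covariances is only a necessary check, not a proof of convergence, but it shows the rescaling in time and space doing its job.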

Understanding weak convergence equips researchers and practitioners alike with the tools to analyze phenomena in fields such as finance, insurance, and environmental science. When dealing with large datasets or complex systems, it allows statisticians to make inferences about the behavior of a system based on the observed relationships among its components. By framing problems in terms of distributions rather than individual outcomes, weak convergence acts as a bridge connecting theoretical probability with practical application, enhancing our ability to predict, analyze, and respond to randomness in various domains.

Importance of Subsequences in Donsker’s Theorem

The relevance of subsequences in Donsker’s Theorem emerges from the way they can simplify the analysis of weak convergence. In various mathematical contexts, handling entire sequences might become unwieldy, particularly when considering convergence properties. By focusing on subsequences, which are derived from the original sequence, one can pinpoint particular behavior or characteristics that aid in the convergence analysis. This approach is not just a matter of convenience; it underscores the intricate relationship between different subsets of data points within larger datasets, guiding researchers toward meaningful interpretations.

To understand this importance more deeply, consider that a convergent subsequence pins down a candidate for the limit, even before convergence of the full sequence is known. In the context of Donsker’s Theorem, which establishes the convergence of normalized random walks to Brownian motion, examining subsequential limits helps clarify the limiting behavior: tightness guarantees that such limits exist, and the finite-dimensional distributions show that each of them must be Brownian motion. This two-step structure gives a more tractable route to convergence in distribution than attacking the full sequence directly.

Additionally, subsequences serve as a crucial tool when verifying the conditions necessary for applying Donsker’s Theorem. In many statistical applications, it may be challenging to check the convergence of the entire sequence directly. A standard criterion resolves this: if every subsequence has a further subsequence that converges weakly to the same limit, then the full sequence converges weakly to that limit. Criteria such as tightness supply the convergent subsequences, and identifying their common limit completes the argument, facilitating a more streamlined analytical process while bolstering the robustness of the conclusions.
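The subsequence principle used in such arguments can be written compactly. For probability measures \(\mu_n\) and \(\mu\) on a metric space (the symbols are introduced here for illustration):

\[
\mu_n \Rightarrow \mu
\quad \Longleftrightarrow \quad
\text{every subsequence } (\mu_{n_k}) \text{ has a further subsequence } (\mu_{n_{k_j}}) \text{ with } \mu_{n_{k_j}} \Rightarrow \mu .
\]

Note that a single convergent subsequence is not enough on its own: the sequence alternating between two different measures has convergent subsequences but does not converge.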

In practical scenarios, this can be particularly powerful. For instance, when dealing with time series data, analysts can identify specific segments of data (subsequences) that are representative of broader patterns. By focusing on these sections, they can capture moments of weak convergence more effectively, allowing for more precise predictions and insights. The interplay of subsequences and weak convergence ultimately enriches the analysis by allowing statisticians and researchers to draw clearer conclusions about underlying distributions, reinforcing the applicability of Donsker’s Theorem in real-world scenarios where randomness plays a significant role.

Key Applications of Donsker’s Theorem in Statistics

Donsker’s Theorem serves as a cornerstone in the field of probability and statistics, particularly when it comes to understanding the convergence behavior of stochastic processes. The theorem essentially provides a framework for connecting discrete random walks with the continuous path of Brownian motion, making it an invaluable tool in various statistical applications. This transformation from discrete to continuous is not just a theoretical curiosity; it has practical implications that extend across diverse fields including finance, ecology, and machine learning.

One of the most direct applications of Donsker’s Theorem is in financial modeling, where it helps connect discrete-time models to the continuous-time models used in option pricing. For instance, when the logarithm of a stock price is modeled as a random walk with finite-variance increments, the theorem implies that the suitably rescaled log-price path converges in distribution to a Brownian motion with drift. This connection simplifies the mathematical analysis of various financial derivatives, making it easier to implement pricing strategies and risk management techniques. In this sense, Donsker’s Theorem helps to motivate the assumptions underpinning models like the Black-Scholes framework, which takes the log-price to follow a Brownian motion with drift.

Applications in Statistics and Beyond

Donsker’s Theorem also plays a critical role in statistical inference, particularly in the formulation of non-parametric tests such as the Kolmogorov-Smirnov test, whose limiting distribution comes from the Brownian bridge. In bootstrapping techniques, where random samples are repeatedly drawn with replacement from the empirical distribution, the validity of the method for many statistics is typically justified by empirical process results of Donsker type. This enables statisticians to derive confidence intervals and hypothesis tests without relying on strict parametric assumptions about the underlying data distribution, thus broadening the applicability of statistical methods.
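To make the resampling idea concrete, here is a minimal sketch of a percentile bootstrap interval for the median. It only shows the mechanics of resampling from the empirical distribution; the function name, the exponential test data, and the number of resamples are illustrative assumptions, and the percentile interval is just one of several bootstrap interval constructions.

```python
import random
import statistics


def bootstrap_percentile_ci(data, statistic, n_resamples, level, rng):
    """Percentile bootstrap: resample with replacement and recompute the statistic."""
    n = len(data)
    replicates = sorted(
        statistic(rng.choices(data, k=n)) for _ in range(n_resamples)
    )
    tail = (1.0 - level) / 2.0
    lower = replicates[int(tail * n_resamples)]
    upper = replicates[int((1.0 - tail) * n_resamples) - 1]
    return lower, upper


rng = random.Random(2)  # fixed seed for reproducibility
# Hypothetical skewed data: 300 draws from an exponential distribution with rate 1.
data = [rng.expovariate(1.0) for _ in range(300)]
sample_median = statistics.median(data)
lower, upper = bootstrap_percentile_ci(data, statistics.median, 1000, 0.95, rng)
print(f"sample median: {sample_median:.3f}, 95% interval: ({lower:.3f}, {upper:.3f})")
```

No normality assumption is made about the data here; the interval comes entirely from the spread of the recomputed medians.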

Furthermore, in the realm of machine learning, Donsker’s Theorem can inform the analysis of algorithms that rely on sampling methods, such as Monte Carlo simulations. The convergence of empirical processes to Gaussian limits such as the Brownian bridge means that practitioners can quantify how far an average computed from a random sample is likely to be from its expectation, uniformly over a class of functions. It helps in understanding how uncertainties propagate through these algorithms, thus providing a theoretical basis for analyzing and improving machine learning models.

In summary, the applications of Donsker’s Theorem extend well beyond the realm of pure mathematics. Its insights into the relationship between discrete and continuous processes facilitate more robust statistical methodologies, inform financial models, and enhance the analytics of machine learning. By grounding practical applications in the rich theoretical framework provided by weak convergence, practitioners can navigate complexities in various domains with greater confidence and precision.

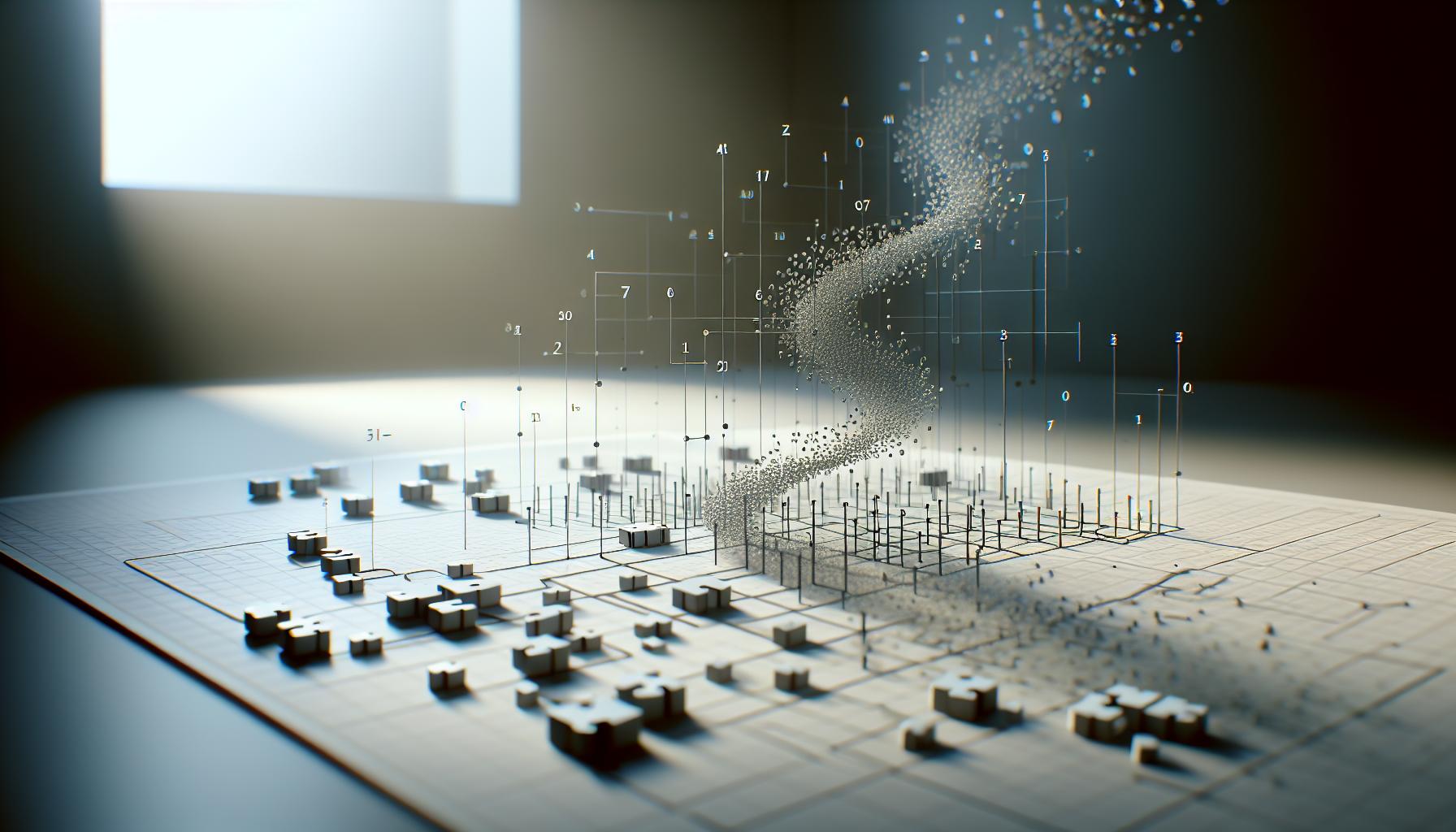

Visualizing Weak Convergence with Diagrams

To grasp the concept of weak convergence effectively, visualizations play a vital role. Imagine plotting the paths of random walks overlaid on a graph. While the individual steps of these walks can appear erratic, as the number of steps increases, the collective behavior begins to reveal a more coherent form. This is where the beauty of weak convergence becomes evident: as the sample paths tend toward the continuous nature of Brownian motion, you start to see that they are not just random noise, but rather a process that approaches a defined limit.

To illustrate weak convergence visually, consider a sequence of empirical distribution functions generated from random samples of a real-valued random variable. Plotting each empirical distribution function on the same set of axes allows for an immediate comparison. Notably, as the sample size grows, the empirical distribution functions converge to a smooth curve reflecting the underlying true distribution. This convergence can be highlighted through a series of diagrams that demonstrate how the empirical functions become indistinguishable from the target distribution. Strictly speaking, this picture shows the Glivenko-Cantelli theorem; Donsker’s Theorem refines it by describing the remaining fluctuations, which are of order \(1/\sqrt{n}\).
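The same comparison can be made numerically without plotting. The sketch below, using illustrative names and sample sizes, computes the largest vertical gap between the empirical distribution function of Uniform(0, 1) samples and the true CDF \(F(x) = x\), along with the gap multiplied by \(\sqrt{n}\).

```python
import math
import random


def sup_gap_uniform(sample):
    """sup over x of |F_n(x) - x| for Uniform(0, 1) data, checked at the jump points."""
    xs = sorted(sample)
    n = len(xs)
    # At the i-th order statistic, F_n jumps from i/n to (i+1)/n.
    return max(max((i + 1) / n - x, x - i / n) for i, x in enumerate(xs))


rng = random.Random(3)  # fixed seed for reproducibility
results = {}
for n in (100, 1000, 10000):
    gap = sup_gap_uniform([rng.random() for _ in range(n)])
    results[n] = (gap, math.sqrt(n) * gap)
    print(f"n={n:>6}  sup gap={gap:.4f}  sqrt(n) * sup gap={math.sqrt(n) * gap:.3f}")
```

The raw gap should shrink as the sample grows, while the rescaled gap should stay of order one; that stable rescaled quantity is what the functional limit theorem describes.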

Key Elements of Visualization

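- Graphs of Stochastic Processes: Show several realizations (sample paths) of a rescaled random walk alongside Brownian paths. Highlight how the individual steps become finer and the paths become statistically indistinguishable from Brownian motion as the number of steps increases, even though each path remains random.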

- Empirical vs. True Distributions: Create a series of plots that illustrate the convergence of empirical distribution functions to a true distribution, emphasizing the role of sample size.

- Step-wise Convergence: Design a sequence of diagrams leading from a discrete random walk to continuous paths, demonstrating each stage of convergence.

Incorporating these visual elements not only enhances understanding but also bridges the gap between complex theoretical ideas and tangible examples. When students and researchers visually observe the convergence process, they internalize the behavior of random processes, making the concept of weak convergence more intuitive and relatable. Such visualizations empower them to appreciate the profound implications of Donsker’s Theorem in various domains, including statistics, finance, and machine learning.

Real-World Examples Illustrating Weak Convergence

Exploring real-world scenarios that illustrate weak convergence highlights its practical significance across various fields. One compelling example comes from finance, where asset prices can be modeled as stochastic processes. As investors analyze daily returns from financial instruments, they often utilize the Central Limit Theorem (CLT) to assess the distribution of average or cumulative returns. In a scenario where an investor samples daily returns from a stock over several months, the empirical distribution of these returns approaches the true return distribution as the sample size increases, while the suitably normalized average return is approximately normal, provided the returns are roughly independent with finite variance. This connection between empirical distributions and theoretical limits is the setting in which Donsker’s Theorem operates, showcasing how weak convergence relates concrete data to established probabilistic models.

Another notable application is found in environmental science, particularly in the study of climate data. Consider temperature measurements taken at various locations over time. Analyzing these measurements often involves creating empirical distributions that reflect seasonal temperatures. As more data is collected across years, researchers may observe that the empirical distribution of yearly average temperatures settles toward a specific distribution, possibly a Gaussian distribution centered on the long-term climate average, at least when year-to-year dependence and long-term trends are weak. This process not only aids in understanding trends over time but also informs policy decisions regarding climate action by utilizing statistical tools grounded in weak convergence theory.

The convergence of empirical measures can also be illustrated in the context of sports statistics. For example, let’s consider a baseball player’s batting average over multiple seasons. Initially, the average might fluctuate widely due to the player’s varying performance. However, as the number of at-bats increases, the batting average exhibits less variability and approaches a stable limit, possibly reflecting the player’s true talent level. The stabilization itself is a law-of-large-numbers effect; weak convergence enters when describing the fluctuations of the average around that limit, which are approximately normal with a spread that shrinks like one over the square root of the number of at-bats. This illustrates how the limit theorems surrounding Donsker’s Theorem underpin our understanding of performance metrics in sports, driving decisions for players, teams, and fans alike.

Ultimately, these examples showcase how the abstract theory of weak convergence has tangible implications in fields ranging from finance to environmental science and sports analytics. The bridge between theory and practice not only enhances our understanding of complex concepts but also empowers decision-making across various domains, providing a rich context for appreciating the applications of Donsker’s Theorem.

Common Misconceptions About Donsker’s Theorem

Understanding Donsker’s Theorem can be challenging, especially given its implications in probability theory and statistics. One prevalent misconception is that weak convergence, as described by Donsker’s Theorem, is simply a weaker form of convergence compared to strong convergence. While “weak” does imply a less stringent requirement, it does not mean that the principles of weak convergence lack importance or robustness. In fact, many statistical practices, including estimation and inference, rely on these weak convergence concepts to justify using empirical distributions to make inferences about populations.

Another common misunderstanding concerns the scope of the theorem. Some believe that Donsker’s Theorem is just the Central Limit Theorem under another name, a statement about a single normal limit. In fact, it is a functional result: it describes the convergence of entire random paths, with a Gaussian process as the limit (Brownian motion for partial sums, a Brownian bridge for empirical processes). It is also more general than it first appears, since it holds for any i.i.d. step distribution with finite variance, and its proof rests on tightness and the identification of subsequential limits.

Additionally, many people overlook the role of subsequences in applying Donsker’s Theorem effectively. Some assume that only the entire sequence needs to converge weakly, neglecting the critical nature of considering subsequences for convergence. This nuanced point is vital because it aids in demonstrating that weak limits can be reached even when not every part of a sequence behaves uniformly. For instance, in statistical sequences derived from real-world data, certain patterns may emerge only within subsequences, revealing insights not apparent from examining the entire dataset holistically.

In summary, clarifying these misconceptions about weak convergence and the application of Donsker’s Theorem allows practitioners and researchers to harness its true power in statistical analysis. Emphasizing the theorem’s broad applicability, the significance of subsequences, and its foundational role in deriving statistical properties will enable a deeper understanding and more effective application of these concepts in various fields, from finance to environmental science.

Advanced Topics: Tightness and Relative Compactness

Understanding the concepts of tightness and relative compactness is essential when delving into Donsker’s Theorem and weak convergence. These ideas serve as foundational blocks that clarify how sequences of probability measures behave, particularly in establishing convergence criteria. Tightness, in a broad sense, refers to the idea that a family of probability measures does not “escape” to infinity. More formally, a sequence of probability measures is called tight if for every ε > 0, there exists a compact set K such that the total measure of the complement of K is less than ε for all measures in the sequence. This concept becomes particularly significant in the context of weak convergence, where one needs to ensure that the probability measures converge in distribution while maintaining control over their behavior throughout the entire sequence.
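In symbols, writing \(\mu_n\) for the measures and \(K^c\) for the complement of \(K\) (notation introduced here for illustration), tightness reads:

\[
\forall\, \varepsilon > 0 \quad \exists\, K \text{ compact such that} \quad \sup_{n} \mu_n(K^c) < \varepsilon .
\]

For example, on the real line the normal distributions \(\mathcal{N}(0, 1 + 1/n)\) form a tight sequence, whereas the shifted distributions \(\mathcal{N}(n, 1)\) do not: for any bounded interval \([-M, M]\), the mass \(\mu_n([-M, M])\) tends to zero as \(n\) grows, so the mass escapes to infinity.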

Relative compactness goes one step further, providing a more rigorous framework for understanding the convergence of sequences of measures. A set of probability measures is relatively compact if every sequence of measures within this set has a subsequence that converges weakly. This notion is crucial for applying Donsker’s Theorem, as it lays down the necessary conditions under which empirical measures (or other types of measures) can converge to a limit, typically a well-understood limit such as a normal distribution. Practitioners often focus on the relative compactness of the tight family of distributions to guarantee that the empirical distribution converges, thus connecting theoretical principles with practical statistical implementations.

Practical Implications of Tightness and Relative Compactness

Understanding these concepts allows researchers to avoid common pitfalls when working with large datasets and empirical distributions. For example, consider a case in which a researcher is analyzing the behavior of a stock price over numerous time intervals. If the researcher uses empirical data to form distributions without checking for tightness, they may mistakenly conclude that the empirical distributions converge to a normal distribution when, in fact, they are diverging or not exhibiting proper behavior at infinity. This can lead to incorrect statistical inferences and predictions. Thus, ensuring that distributions are tight is not merely an academic exercise; it significantly impacts the validity of the entire analytical process.

A common approach to establishing relative compactness involves employing Prokhorov’s theorem, which states that on a complete separable metric (Polish) space, a family of probability measures is tight if and only if it is relatively compact in the weak topology. In practice, this means that once tightness has been verified, convergent subsequences are guaranteed to exist. This theorem facilitates practical applications in various fields; for instance, in finance, it supports the use of limit theorems for stochastic process models fitted to historical data. As researchers learn to navigate tightness and relative compactness, they arm themselves with robust tools for ensuring that weak convergence under Donsker’s Theorem properly applies, leading to more accurate and meaningful statistical analysis.

How to Prove Donsker’s Theorem Step-by-Step

To prove Donsker’s Theorem is to unlock a cornerstone of probability theory, revealing how the Central Limit Theorem (CLT) extends from single sums to entire processes. In its classical form, the theorem states that the suitably rescaled partial-sum process of i.i.d. random variables with mean zero and finite variance converges weakly to a Brownian motion; in its empirical-process form, it states that the centered and rescaled empirical distribution function converges weakly to a Brownian bridge. The proof hinges on careful construction and application of several key principles in measure theory and probability.

An effective approach begins by clearly defining the empirical distribution function, which is built directly from the sample. Start with the sequence of i.i.d. random variables \(X_1, X_2, \ldots, X_n\), each with a common distribution function \(F\). The empirical distribution function is given by:

\[
F_n(x) = \frac{1}{n} \sum_{i=1}^{n} I_{(-\infty, x]}(X_i)
\]

where \(I\) is the indicator function. As \(n\) approaches infinity, \(F_n\) itself converges uniformly to \(F\) (the Glivenko-Cantelli theorem), so the aim is to show that the centered and rescaled process \(\sqrt{n}(F_n - F)\) converges weakly to a Gaussian process, namely a Brownian bridge evaluated at \(F(x)\). This step involves proving the tightness of the associated measures, that is, showing that no probability mass escapes to infinity along the sequence.
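For readers who prefer code to notation, a minimal sketch of the empirical distribution function is shown below. The helper name `make_ecdf` and the toy sample are illustrative; the function simply counts the fraction of observations less than or equal to \(x\).

```python
import bisect


def make_ecdf(sample):
    """Return F_n, the empirical CDF of `sample`: F_n(x) = (number of X_i <= x) / n."""
    ordered = sorted(sample)
    n = len(ordered)

    def ecdf(x):
        # bisect_right counts how many sorted values are less than or equal to x.
        return bisect.bisect_right(ordered, x) / n

    return ecdf


F_n = make_ecdf([2.0, 0.5, 1.5, 0.5])
values = [F_n(0.0), F_n(0.5), F_n(1.7), F_n(2.0)]
print(values)  # [0.0, 0.5, 0.75, 1.0]
```

Sorting once and using binary search keeps each evaluation at logarithmic cost, which matters when the function is evaluated at many points.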

Constructing the Proof

- Tightness Argument: Utilize Prokhorov’s theorem, which states that tight sequences of probability measures on Polish spaces have weakly convergent subsequences. Demonstrating tightness is crucial since it provides the required condition for subsequential limits which may converge weakly.

- Convergence in Distribution: To identify the limit, view the centered and rescaled empirical distribution function as a random process indexed by \(x\). A common first step is to establish that, for each fixed \(x\):

\[
\sqrt{n}(F_n(x) - F(x)) \Rightarrow \mathcal{N}(0, \sigma^2)
\]

where \(\mathcal{N}(0, \sigma^2)\) denotes a normal distribution with zero mean and variance \(\sigma^2 = F(x)(1 - F(x))\). The same argument applied to finitely many points at once, via the multivariate CLT, gives convergence of the finite-dimensional distributions. The proof of the CLT step typically employs characteristic functions (Fourier transforms of the distributions), whose pointwise convergence implies convergence in distribution.

- Use of Continuous Mapping Theorem: Once weak convergence of the process is established, it transfers to continuous functionals of the path. For instance, if \(g\) is a continuous functional, the Continuous Mapping Theorem implies that \(g\) applied to \(\sqrt{n}(F_n - F)\) converges in distribution to \(g\) applied to the limiting process. The supremum functional, which yields the Kolmogorov-Smirnov statistic, is a standard example.
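The continuous-mapping step can be illustrated with the random-walk form of the theorem. The running maximum is a continuous functional of the path, and for standard Brownian motion \(W\) on \([0, 1]\) the reflection principle gives \(P(\max_{t \le 1} W_t \le x) = 2\Phi(x) - 1\) for \(x \ge 0\). The sketch below compares this with a simulation using fair ±1 steps; the step distribution, path length, and number of paths are illustrative assumptions.

```python
import math
import random


def normal_cdf(x):
    """Standard normal CDF, written with the error function."""
    return 0.5 * (1.0 + math.erf(x / math.sqrt(2.0)))


def rescaled_walk_maximum(n_steps, rng):
    """Max over k <= n of S_k / sqrt(n) for one fair +/-1 random walk with S_0 = 0."""
    position, highest = 0, 0
    for _ in range(n_steps):
        position += 1 if rng.random() < 0.5 else -1
        highest = max(highest, position)
    return highest / math.sqrt(n_steps)


rng = random.Random(4)  # fixed seed for reproducibility
n_steps, n_paths, level = 900, 2000, 1.0

hits = sum(rescaled_walk_maximum(n_steps, rng) <= level for _ in range(n_paths))
simulated = hits / n_paths
limit = 2.0 * normal_cdf(level) - 1.0  # reflection-principle value for Brownian motion
print(f"simulated: {simulated:.3f}, Brownian limit: {limit:.3f}")
```

A small discrepancy is expected, since a walk with finitely many steps cannot reach levels between its lattice points; the gap should narrow as `n_steps` grows.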

Practical Application

In a practical context, consider data obtained from repeated experiments in a laboratory setting. Each experiment generates a sample that might appear erratic. With the proof steps outlined above in mind, researchers can apply Donsker’s Theorem with more confidence; they would recognize that despite the apparent chaos in individual results, the fluctuations of the aggregated data behave predictably as the sample grows large, following an approximately Gaussian law. This insight is invaluable in statistics and inferential reasoning, lending credence to predictions made from empirical data.

By decomposing the proof into these structured steps, you empower yourself and your colleagues to grapple with the complexities of probability. This understanding not only enhances theoretical insights but also bolsters practical applications across fields relying on statistical inference, such as finance, healthcare, and social sciences.

The Role of Central Limit Theorem in Weak Convergence

The Central Limit Theorem (CLT) is often hailed as one of the most significant results in probability theory, laying the groundwork for understanding how averages of samples behave. It asserts that, given a sufficiently large sample size from any distribution with a finite mean and variance, the distribution of the sample means will approach a normal distribution, regardless of the shape of the original distribution. This remarkable trait is intricately linked to weak convergence and is fundamental to grasping Donsker’s Theorem.

Donsker’s Theorem effectively extends the CLT to the realm of empirical processes, characterizing how the centered and rescaled empirical distribution function converges weakly to a Gaussian process (a Brownian bridge), just as rescaled partial sums converge to a Brownian motion. This relationship provides a robust framework for understanding not just the behavior of averages, but also the dynamic processes that underlie statistical methods in fields ranging from economics to biology. As such, recognizing the role of the CLT in establishing weak convergence is essential for any serious work in statistical theory or application.

Connecting CLT with Weak Convergence

To see this connection, let’s consider how the empirical distribution functions \(F_n\) defined earlier relate to the CLT. When we normalize the empirical distribution function, that is, when we center it by subtracting the true distribution function \(F\) and scale the difference by \(\sqrt{n}\), we observe that the fluctuations mirror the behavior of a Brownian bridge in the limit of large sample sizes. Specifically, as \(n\) approaches infinity, the sequence:

\[
\sqrt{n}(F_n(x) - F(x))
\]

converges in distribution to a Gaussian process, viewed as a random function of \(x\). For a single fixed \(x\), this is just the CLT applied to the indicator variables \(I_{(-\infty, x]}(X_i)\). This result encapsulates both the intuitive idea of convergence in terms of averages and the more nuanced concept of convergence in the space of distributions or processes.
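A quick simulation can check the covariance structure this limit implies. For Uniform(0, 1) data, where \(F(x) = x\), the process \(\sqrt{n}(F_n(x) - F(x))\) has variance \(x(1 - x)\) at a point \(x\) and covariance \(\min(s, t) - st\) between points \(s\) and \(t\), which is the covariance of a Brownian bridge. The sketch below estimates both; the choice of points, sample size, and number of replications are illustrative.

```python
import math
import random


def empirical_process_uniform(points, n, rng):
    """sqrt(n) * (F_n(x) - x) at each x in `points`, from n Uniform(0, 1) draws."""
    sample = [rng.random() for _ in range(n)]
    root_n = math.sqrt(n)
    return [root_n * (sum(u <= x for u in sample) / n - x) for x in points]


rng = random.Random(5)  # fixed seed for reproducibility
s, t, n, n_reps = 0.3, 0.7, 400, 2000

at_s, at_t = [], []
for _ in range(n_reps):
    value_s, value_t = empirical_process_uniform([s, t], n, rng)
    at_s.append(value_s)
    at_t.append(value_t)

mean_s, mean_t = sum(at_s) / n_reps, sum(at_t) / n_reps
var_s = sum((a - mean_s) ** 2 for a in at_s) / (n_reps - 1)
cov_st = sum((a - mean_s) * (b - mean_t) for a, b in zip(at_s, at_t)) / (n_reps - 1)

print(f"variance at s:  {var_s:.3f}  (bridge value {s * (1 - s):.3f})")
print(f"covariance s,t: {cov_st:.3f}  (bridge value {min(s, t) - s * t:.3f})")
```

Agreement of these second moments is consistent with the Brownian bridge limit, though it does not by itself establish convergence of the whole process.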

Moreover, as researchers apply Donsker’s Theorem in practical contexts – say in the analysis of climate data or financial returns – they rely on this foundational connection to understand how empirical data can be treated and interpreted under the assumption of normality as sample sizes increase. This leads to more robust statistical modeling and inference.

Practical Implications

Understanding how Donsker’s Theorem relies on the CLT provides deeper insights into empirical processes’ behavior in numerous applications. For instance, in quality control processes, the average outcomes from a large number of tests must be treated with the principles of these foundational theorems to ensure reliability. Similarly, in clinical trials, when analyzing the effects of a new treatment over numerous trials, the assumption that the averages converge to normality allows practitioners to apply various statistical techniques confidently.

In summary, the interplay between the Central Limit Theorem and weak convergence is not just theoretical but has tangible implications across many fields. As researchers delve into more complex models, understanding these relationships enhances their ability to make informed decisions based on empirical data, reinforcing the critical importance of these mathematical foundations in practical applications.

Implications for Practical Statistical Analysis

The ability to understand and effectively apply Donsker’s Theorem in practical statistical analysis can dramatically enhance the reliability of empirical research across multiple disciplines. By framing the convergence of empirical processes within the context of weak convergence, researchers can leverage the robustness of the theorem to glean insights from data that may initially appear erratic or non-normal. This becomes particularly valuable in fields such as finance, biostatistics, and social sciences, where decision-making often hinges on interpreting sample data accurately.

One of the immediate implications of Donsker’s Theorem is its utility in validating the asymptotic normality of statistics built from empirical measures. When researchers collect large datasets, whether from longitudinal studies or market trends, they can use Donsker’s insights to affirm that the fluctuations of their empirical distribution functions around the true distribution are approximately those of a Brownian bridge as sample sizes increase. This convergence supports the large-sample validity of many common statistical methods, including hypothesis tests and confidence intervals based on empirical distributions, even when the underlying population distribution is not normal. Practitioners can be more confident in their inferential procedures when they know that some of the assumptions required for traditional parametric tests can be relaxed, provided conditions such as independence and finite variance hold.

Furthermore, the application of Donsker’s Theorem extends to advanced methodologies such as bootstrapping, where the principles of weak convergence underpin the sampling distributions of various estimators. In this context, resampling methods can effectively approximate the distributions of statistics from small samples by utilizing empirical processes, reaffirming the theoretical underpinnings provided by Donsker’s framework. Many researchers now incorporate these ideas into algorithms that assist in areas like machine learning and predictive analytics, enhancing model robustness by accommodating the nuances of real-world data.

In more specialized applications, empirical process results of Donsker type are used in the asymptotic theory of the generalized method of moments (GMM), a widely used estimation technique in econometrics. By establishing the weak convergence of the sample moment conditions, econometricians can derive the limiting distributions of estimators that are less sensitive to deviations from standard distributional assumptions. This fosters enhanced precision and reliability in economic modeling, allowing policymakers and analysts to make informed decisions supported by rigorous statistical backing. As such, understanding and applying Donsker’s Theorem is not merely an academic exercise; it represents a cornerstone of modern statistical practice that can significantly impact decision-making across various sectors.

Further Reading: Publications on Donsker’s Theorem

Donsker’s Theorem serves as a foundation for understanding weak convergence in probability theory, providing a critical link between empirical processes and Brownian motion. For those eager to delve deeper into this theorem and its multifaceted applications, there is a wealth of literature available. Engaging with these texts will not only enhance your theoretical understanding but also equip you with practical tools that inform real-world statistical analysis.

Key publications include “Convergence of Probability Measures” by Patrick Billingsley, which offers an accessible introduction to the concept of weak convergence and its implications in probability theory, including Donsker’s Theorem. This book is particularly valuable for readers seeking a blend of rigorous mathematical treatment and intuitive explanations. Another crucial resource is “Empirical Processes for Statistics” by Gábor Lugosi and Shahar Mendelson, which discusses the applications of Donsker’s Theorem in statistical learning theory, providing examples that bridge the gap between theory and practical applications.

For those interested in more advanced approaches, consider “Convergence of Stochastic Processes” by David Pollard, which develops weak convergence and empirical process methods with statistical applications in mind. Furthermore, “Uniform Central Limit Theorems” by R. M. Dudley studies the conditions under which empirical processes indexed by classes of sets or functions converge, the setting in which Donsker’s result is generalized.

As you engage with these publications, you’ll gain a comprehensive understanding of Donsker’s Theorem, solidifying its role as a pivotal concept in both theoretical and applied statistics. Whether your focus is on empirical processes, applications in econometrics, or advancements in statistical methodologies, these resources will arm you with the knowledge needed to navigate the complexities of weak convergence and its significance in contemporary research.

FAQ

Q: What are the assumptions of Donsker's Theorem in weak convergence?

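A: In its classical form, Donsker's Theorem assumes that the steps of the random walk are independent and identically distributed (i.i.d.) with mean zero and finite, strictly positive variance. The partial sums are then rescaled in time by n and in space by σ√n, and the resulting process converges weakly to Brownian motion. Convergence in distribution is the conclusion of the theorem, not one of its assumptions. Understanding these assumptions is crucial for applying the theorem effectively in statistical contexts.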
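For reference, the classical statement can be written out as follows. The notation (S_k, W_n, W) is chosen here for illustration and may differ from the notation used elsewhere.

```latex
Let $X_1, X_2, \dots$ be i.i.d. with $\mathbb{E}[X_1] = 0$ and
$\operatorname{Var}(X_1) = \sigma^2 \in (0, \infty)$, and set
$S_0 = 0$, $S_k = X_1 + \dots + X_k$. Define the linearly interpolated,
rescaled walk
\[
  W_n(t) = \frac{1}{\sigma\sqrt{n}}
  \Bigl( S_{\lfloor nt \rfloor}
       + (nt - \lfloor nt \rfloor)\, X_{\lfloor nt \rfloor + 1} \Bigr),
  \qquad t \in [0, 1].
\]
Then $W_n \Rightarrow W$ in $C[0,1]$ as $n \to \infty$, where $W$ is a
standard Brownian motion and $\Rightarrow$ denotes weak convergence.
```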

Q: How does Donsker's Theorem relate to empirical processes?

A: Donsker's Theorem has an empirical-process version. For i.i.d. observations with distribution function F, the scaled difference √n(F_n − F) between the empirical distribution function F_n and F converges weakly to a Gaussian process, namely a Brownian bridge evaluated at F. This result underlies the Kolmogorov-Smirnov test and related tools of statistical inference. For a deeper dive, refer to the section on key applications of the theorem.
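A small simulation can make the empirical-process connection concrete. The sketch below draws uniform samples, computes the scaled sup-distance between the empirical distribution function and the true one, and estimates its 0.95 quantile. The helper names and the values of `n` and `n_rep` are assumptions made for this example.

```python
import numpy as np

def scaled_ks_uniform(n, rng):
    """sqrt(n) * sup_x |F_n(x) - x| for n Uniform(0, 1) draws."""
    u = np.sort(rng.uniform(size=n))
    i = np.arange(1, n + 1)
    d_plus = np.max(i / n - u)         # F_n above F, checked at each jump
    d_minus = np.max(u - (i - 1) / n)  # F_n below F, just before each jump
    return np.sqrt(n) * max(d_plus, d_minus)

def simulate_ks(n=500, n_rep=2000, seed=0):
    """Repeat the experiment n_rep times and collect the statistics."""
    rng = np.random.default_rng(seed)
    return np.array([scaled_ks_uniform(n, rng) for _ in range(n_rep)])

ks_stats = simulate_ks()
ks_q95 = np.quantile(ks_stats, 0.95)
```

If the empirical process behaves like a Brownian bridge, `ks_q95` should land near 1.36, the 0.95 quantile of the Kolmogorov distribution, up to simulation noise and finite-sample effects.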

Q: What is the role of tightness in Donsker's Theorem?

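A: Tightness ensures that a sequence of probability measures has weakly convergent subsequences. A family of measures is tight if, for every ε > 0, there is a single compact set whose complement has measure less than ε under every measure in the family. By Prokhorov's theorem, tightness implies that every subsequence has a further subsequence that converges weakly. In the proof of Donsker's Theorem, tightness is combined with convergence of the finite-dimensional distributions, which identifies Brownian motion as the only possible limit.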

Q: Can Donsker's Theorem be applied to non-iid random variables?

A: Yes. Donsker's Theorem can be extended to certain classes of random variables that are not i.i.d. (independent and identically distributed), provided specific conditions on their dependence and moments are met. Well-known examples include martingale difference sequences and stationary sequences satisfying mixing conditions. These extensions are crucial for broader applications in statistics, especially for real-world data such as time series.

Q: Why is the Central Limit Theorem important for understanding Donsker's Theorem?

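A: The Central Limit Theorem (CLT) states that suitably centered and scaled sums of random variables converge in distribution to a normal distribution. Donsker's Theorem is its functional counterpart: instead of the sum at a single time, it describes the entire rescaled path of partial sums, and it is therefore also called the functional central limit theorem. Evaluating the limiting Brownian motion at time 1 recovers the classical CLT, so understanding one theorem is instrumental for understanding the other.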

Q: What are some practical implications of Donsker's Theorem in statistics?

A: Donsker's Theorem has significant implications in non-parametric statistics, particularly in the construction of confidence intervals and hypothesis tests. For example, it justifies the Kolmogorov-Smirnov goodness-of-fit test and uniform confidence bands for a distribution function. Its applications extend to bootstrap methods, where weak convergence provides the asymptotic justification for resampling-based inference.

Q: How can one visualize the weak convergence depicted in Donsker's Theorem?

A: One way to visualize the convergence is to plot rescaled random walk paths for an increasing number of steps: as the number of steps grows, the paths become visually indistinguishable from Brownian motion paths. For the empirical-process version, plotting √n(F_n − F) shows paths that resemble a Brownian bridge, starting and ending at zero. Refer to our visualization section for detailed diagrams and explanations.
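The sketch below generates rescaled random walk paths that could be passed to any plotting library. It assumes steps of ±1 with equal probability (so σ = 1), and the function name `rescaled_walks` is hypothetical. As a simple sanity check, it also computes the sample variance across paths at times 0.5 and 1, which for Brownian motion would be 0.5 and 1.

```python
import numpy as np

def rescaled_walks(n_steps, n_paths, seed=0):
    """Simulate random walks rescaled as in Donsker's Theorem.

    Returns the time grid k / n_steps and an array of shape
    (n_paths, n_steps + 1) holding S_k / sqrt(n_steps).
    """
    rng = np.random.default_rng(seed)
    # Steps are +1 or -1 with equal probability: mean 0, variance 1.
    steps = rng.choice([-1.0, 1.0], size=(n_paths, n_steps))
    partial_sums = np.cumsum(steps, axis=1)
    start = np.zeros((n_paths, 1))  # every path starts at 0
    paths = np.concatenate([start, partial_sums], axis=1) / np.sqrt(n_steps)
    times = np.arange(n_steps + 1) / n_steps
    return times, paths

times, paths = rescaled_walks(n_steps=1000, n_paths=2000)
var_half = paths[:, 500].var()  # compare with Var W(0.5) = 0.5
var_one = paths[:, -1].var()    # compare with Var W(1) = 1
```

Plotting a handful of rows of `paths` against `times` for several values of `n_steps` gives a visual impression of the convergence, although a plot alone is only suggestive and does not verify weak convergence.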

Q: What are common challenges in applying Donsker's Theorem?

A: One challenge in applying Donsker's Theorem is verifying that its conditions hold, in particular the moment and independence assumptions, or, for more general processes, tightness. Practitioners might also struggle with normalization: the scaling involves the standard deviation σ, which is usually unknown and must be estimated from the data. Familiarity with these challenges can enhance practical usage in statistical analysis.

To Conclude

Thank you for exploring “Donsker’s Theorem: Weak Convergence and Subsequences” with us! You’ve delved into a fundamental concept that bridges probability and analysis, highlighting how weak convergence plays a pivotal role in understanding stochastic processes. To deepen your understanding, consider checking out our related articles on the Central Limit Theorem and on convergence in distribution, which provide further insights and examples.
